Practical Guide to Writing Effective Prompts and Getting the Most Out of Generative AI

When you interact with a generative AI (like ChatGPT, Claude, Gemini, or Perplexity), you are not writing code; you are having a conversation. You write your request in natural language, so the way you phrase it directly shapes the quality of the result. When the goal is to build software, this conversational approach is often called vibe coding: instead of relying on technical syntax, you describe what you want to achieve and let the AI turn your ideas into concrete output.

_The Universal Recipe for the Perfect Prompt

To design effective prompts, it helps to know the basic structure and how its key elements interact.

Models from OpenAI, Anthropic, and other providers generally perform better when your prompt follows this simple three-part structure:

| Element | What to Include | Why It Matters |
|---|---|---|
| Instruction | A clear request, like the title of a task. | Immediately focuses the output on your goal. |
| Context | Information, data, tone, role, constraints. | Helps the model “understand” what you want and what to avoid. |
| Format | How the answer should be delivered. | Makes the output more structured and usable. |

Practical Example:

- Instruction: Analyze the data in this table and suggest three optimization strategies.

- Context: The table shows performance metrics of a web app over the last 8 weeks.

- Format: Respond with three bullet points, each with a short technical explanation.
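As a minimal sketch, the three parts of the example can be assembled into a single prompt string in Python. The function name `build_prompt` and the section labels are illustrative choices, not a format any provider requires; the point is simply that the instruction comes first and each part is clearly separated.

```python
def build_prompt(instruction: str, context: str, output_format: str) -> str:
    """Assemble a prompt with the instruction first, then context, then format."""
    sections = [
        ("Instruction", instruction),
        ("Context", context),
        ("Format", output_format),
    ]
    # Label each part so the model can tell the task apart from the background data.
    return "\n\n".join(f"{label}:\n{text.strip()}" for label, text in sections)


prompt = build_prompt(
    instruction="Analyze the data in this table and suggest three optimization strategies.",
    context="The table shows performance metrics of a web app over the last 8 weeks.",
    output_format="Respond with three bullet points, each with a short technical explanation.",
)
print(prompt)
```

In practice you would paste the table itself into the context section, below the one-line description.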

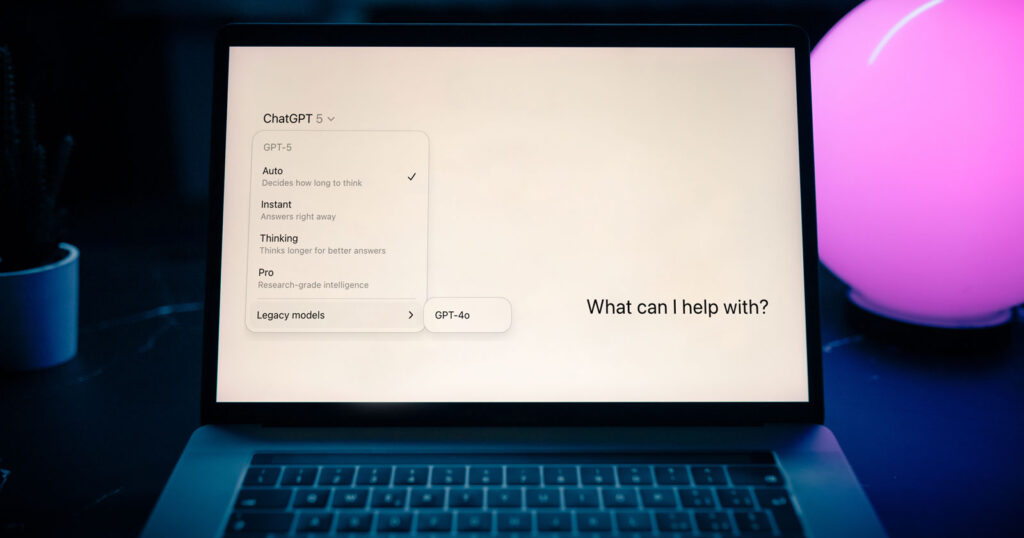

_Choose the Right Model… or Let the AI Do It for You

Before GPT-5, picking the right model in ChatGPT was critical: each model had specific strengths, and choosing accordingly made a big difference.

With GPT-5, things changed: ChatGPT now automatically selects the most suitable engine (model or internal mode) for your request.

Your prompt is routed to one of two main operating modes:

- Chat → fast, fluid, ideal for quick tasks and general interactions.

- Thinking → slower, but with deep reasoning—perfect for complex analysis, coding, and multi-step problems.

You can still try nudging the system by specifying a mode in your prompt, but most of the time the AI decides on its own.

Where Manual Selection Still Matters

If you’re using tools like Claude, Claude Code, or Perplexity, choosing the model is still up to you. In those cases, you can prioritize speed, accuracy, or reasoning depth—just like ChatGPT before GPT-5.

Alternatively, through an API (such as the OpenAI API or an equivalent), you can still specify the exact model, along with parameters such as temperature, maximum output length, or reasoning settings. This is especially useful when integrating AI into custom apps or automated workflows.
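For illustration, here is roughly what such a request body looks like for OpenAI's Chat Completions endpoint, built as a plain Python dictionary so it runs without an API key. The model name is a placeholder for one your account can access, and the parameter values are examples only. Some reasoning models accept only a subset of these parameters, and other providers name things differently (Anthropic's Messages API, for instance, takes the system prompt as a top-level `system` field and requires `max_tokens`), so check the documentation for the model you choose.

```python
import json
from typing import Optional


def chat_request_body(model: str, system_prompt: str, user_prompt: str,
                      temperature: Optional[float] = None,
                      max_completion_tokens: Optional[int] = None) -> dict:
    """Build a Chat Completions-style request body with an explicitly chosen model."""
    body = {
        "model": model,
        "messages": [
            # The system message carries persistent instructions (role, tone, rules).
            {"role": "system", "content": system_prompt},
            # The user message carries the actual request.
            {"role": "user", "content": user_prompt},
        ],
    }
    # Only include optional parameters when set, since not every model supports them.
    if temperature is not None:
        body["temperature"] = temperature
    if max_completion_tokens is not None:
        body["max_completion_tokens"] = max_completion_tokens
    return body


body = chat_request_body(
    model="gpt-4o-mini",  # placeholder: use any model available to your account
    system_prompt="You are a concise technical analyst.",
    user_prompt="Summarize the attached performance metrics in three bullet points.",
    temperature=0.2,
    max_completion_tokens=300,
)
print(json.dumps(body, indent=2))
```

The official `openai` Python package accepts the same fields as keyword arguments to `client.chat.completions.create`, so a dictionary like this maps directly onto an SDK call.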

Why the Prompt Now Matters More Than Ever

With GPT-5, the prompt itself is your main lever to guide which engine the AI uses:

- Define complexity and expected output (“Explain step by step”, “Detailed analysis with citations”, “Brief structured summary”).

- Provide clear context so the system knows whether deep reasoning or quick answers are required.

- Use explicit mode instructions (“Answer in deep reasoning mode” or “Give a fast, concise response”).

Good prompt engineering not only improves response quality; it can also steer the AI toward the most suitable mode, even if you can no longer manually select GPT-4, GPT-4o, or the o-series models.

_10 Evergreen Tips (and Why They Work)

- Precision is the real strength. Vague, contradictory, or unstructured prompts reduce quality: the model has to reconcile incompatible instructions, which tends to produce incoherent or overly generic output.

- Write the instruction first, then the rest. Stating the task up front tells the model how to interpret everything that follows. Example: ✅ “Analyze these performance metrics and suggest improvements.” ❌ Starting with raw data without saying what to do with it.

- Be specific (without overloading). Indicate audience, tone, length, and desired output. Example: “Write an abstract of no more than 150 words, in a formal tone, for a developer audience.”

- Show an example: one-shot or few-shot.

  - One-shot: a single example.

  - Few-shot: 2–3 similar examples.

  Example: “Here’s a sample summary. Apply the same style to the following text.”

- Start simple, then refine with constraints. Begin with “Write a concise list,” then add “in an ironic tone” or “for a developer audience” as needed.

- Avoid vague requests.

  - ❌ “Write something short.”

  - ✅ “Maximum 100 words, use concrete examples, keep it conversational.”

- Say what you want, not what to avoid.

  - ❌ “Don’t be generic.”

  - ✅ “Focus on numerical results and verifiable data.”

- Use technical guide words. Examples:

  - “Start with: Title – then bullet list.”

  - “Return as valid JSON.”

  - “Write in Markdown.”

- Tune parameters (if using the API or Playground).

  - Low temperature (e.g., 0.2) → more focused, predictable responses. Useful for analysis and fact-checking.

  Via API, you can also:

  - Specify the model (e.g., `gpt-4o-mini`, `gpt-4o`) with providers that allow it.

  - Set length limits (`max_tokens`; newer OpenAI models use `max_completion_tokens`) to control output size.

  - Define style and tone through persistent system prompts.

  This lets you integrate AI into apps or automations while controlling speed, cost, and quality.

- Review your prompts regularly. Test them periodically (monthly, for example) and update references, examples, and constraints. A prompt that works today may behave differently after the next model update.
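The “Show an example” tip maps naturally onto chat-style APIs: each example becomes a user turn followed by the assistant reply you want imitated, and the real input comes last. The sketch below builds that message list in Python; the function name and the sample release notes are hypothetical, and the role names follow the common `system`/`user`/`assistant` convention.

```python
from typing import Dict, List, Tuple


def few_shot_messages(task: str, examples: List[Tuple[str, str]],
                      new_input: str) -> List[Dict[str, str]]:
    """Turn (input, desired output) pairs into a few-shot chat message list."""
    messages = [{"role": "system", "content": task}]
    for example_input, example_output in examples:
        # Each example is shown as a past exchange the model should imitate.
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # The real request comes last, after the demonstrations.
    messages.append({"role": "user", "content": new_input})
    return messages


examples = [
    ("Release notes: fixed login timeout; added dark mode.",
     "Summary: 1 fix, 1 feature. Highlight: dark mode."),
    ("Release notes: improved search speed; fixed CSV export bug.",
     "Summary: 1 fix, 1 improvement. Highlight: faster search."),
]
messages = few_shot_messages(
    task="Summarize release notes in the same style as the examples.",
    examples=examples,
    new_input="Release notes: added SSO; fixed crash on upload; reduced bundle size.",
)
for m in messages:
    print(f"{m['role']:>9}: {m['content']}")
```

Passing a single pair gives a one-shot prompt; two or three pairs give the few-shot version.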

_5 Prompt Patterns You Can Use Today

These recurring patterns work even if you never write a single line of code:

- Role-play. Ask the AI to behave like a specific expert. Example: *“Pretend to be a Data Engineer: explain these results to a CTO.”*

- Checklist. Great for reviews or compliance checks. Example: *“Does this landing page follow UX best practices? Reply with a ✅/❌ table.”*

- Chain-of-thought (step-by-step reasoning). Useful for solving complex problems. Example: *“Think step by step before answering. Explain every stage of your reasoning.”*

- Self-critique. Helps you spot errors and weak points before you rely on the output. Example: *“After your response, list three possible errors or limitations in your own analysis.”*

- Multimodal. Combine text with images if the model supports it. Example: *“Look at this screenshot. Identify 3 UX issues and explain each with examples.”*
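Because these patterns are reusable wrappers around a task, they can be stored as small templates and combined. A minimal Python sketch, assuming you keep the pattern wording in a dictionary; the keys and phrasings are adapted from the examples above and are illustrative, not a standard set. Multimodal is left out because it depends on attaching an image rather than on wording alone.

```python
from typing import List

# Illustrative pattern wordings; {task} is the base request being wrapped.
PATTERNS = {
    "role_play": "Pretend to be a {role}. {task}",
    "checklist": "{task} Reply with a table marking each item ✅ or ❌.",
    "step_by_step": "{task} Think step by step before answering. Explain every stage of your reasoning.",
    "self_critique": "{task} After your response, list three possible errors or limitations in your own analysis.",
}


def apply_patterns(task: str, pattern_names: List[str], **fields: str) -> str:
    """Wrap a base task with one or more patterns, applied in the given order."""
    prompt = task
    for name in pattern_names:
        # The prompt built so far becomes the {task} of the next pattern;
        # extra fields (like role) are ignored by templates that don't use them.
        prompt = PATTERNS[name].format(task=prompt, **fields)
    return prompt


prompt = apply_patterns(
    "Explain these quarterly results to a CTO.",
    ["role_play", "self_critique"],
    role="Data Engineer",
)
print(prompt)
```

Keeping the wording in one place means a pattern you improve once is improved everywhere it is used.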

_From Prompt to Tool in 5 Steps

- Write a first draft of the prompt. Follow the structure Instruction + Context + Format.

- Test it on real cases. Try it with 3–5 real use cases. If it doesn’t work, note what’s missing or ambiguous.

- Optimize. Add examples, narrow down the context, clarify constraints.

- Automate. Use the prompt in APIs, no-code platforms, or automation workflows.

- Monitor and update. Review it periodically, adapting it to new scenarios and the latest model updates.

A well-designed prompt can evolve into a reusable tool—for content creation, daily support tasks, or speeding up repetitive analysis.
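The testing step can be made repeatable with a small check that runs the prompt against a handful of real inputs and validates each answer, here assuming the prompt asks for JSON (the “Return as valid JSON” guide word from the tips). The sketch is Python; `generate` stands in for whatever function calls your model, and `fake_generate` is a hypothetical stub so the example runs offline. The required keys and word limit are illustrative assumptions.

```python
import json
from typing import Callable, Dict, List

REQUIRED_KEYS = {"title", "summary"}  # hypothetical output contract for this prompt
MAX_SUMMARY_WORDS = 50                # hypothetical length limit


def check_output(raw: str) -> List[str]:
    """Return the problems found in one model response (an empty list means it passed)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return ["not valid JSON"]
    if not isinstance(data, dict):
        return ["JSON is not an object"]
    problems = [f"missing key: {key}" for key in sorted(REQUIRED_KEYS - set(data))]
    summary = data.get("summary", "")
    if isinstance(summary, str) and len(summary.split()) > MAX_SUMMARY_WORDS:
        problems.append("summary too long")
    return problems


def run_cases(prompt: str, cases: List[str],
              generate: Callable[[str], str]) -> Dict[str, List[str]]:
    """Run the prompt on each real input and collect the problems found per case."""
    return {case: check_output(generate(f"{prompt}\n\n{case}")) for case in cases}


def fake_generate(full_prompt: str) -> str:
    # Hypothetical stand-in for a real model call, returning canned answers.
    if "empty" in full_prompt:
        return "Sorry, I can't summarize that."
    return json.dumps({"title": "Weekly metrics", "summary": "Page load time improved after caching."})


report = run_cases(
    "Summarize the text below. Return valid JSON with the keys title and summary.",
    ["Metrics for week 32 (sample text)", "An empty report"],
    fake_generate,
)
for case, problems in report.items():
    print(f"{case} -> {problems or 'OK'}")
```

With a real model call in place of `fake_generate`, rerunning the same cases after every prompt edit or model update covers the monitoring step as well.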

_Quick Schema to Write Prompts that Work

Instruction:

[Clear and direct request]

Context:

[Background info, data, reference examples]

Format:

[Desired output: list, table, code, markdown, JSON…]

Notes:

[Tone, constraints, language, target audience]

Think of it as a mini technical spec: the clearer you are, the better the AI will respond.
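The schema can also be kept as a reusable template, so every new prompt starts from the same spec. A minimal sketch with Python's standard `string.Template`: `substitute` raises a `KeyError` when a field is missing, which is a cheap guard against forgetting a section. The field names mirror the schema; the filled-in values are hypothetical.

```python
from string import Template

# The four sections of the schema, in order.
PROMPT_SPEC = Template(
    "Instruction:\n$instruction\n\n"
    "Context:\n$context\n\n"
    "Format:\n$format\n\n"
    "Notes:\n$notes"
)

prompt = PROMPT_SPEC.substitute(
    instruction="Write a product update email announcing the new export feature.",
    context="Audience: existing B2B customers. Feature: one-click CSV and PDF export.",
    format="Subject line, then three short paragraphs in Markdown.",
    notes="Friendly but professional tone, English, under 150 words.",
)
print(prompt)

# A missing field fails loudly instead of producing an incomplete prompt.
try:
    PROMPT_SPEC.substitute(instruction="Summarize this report.")
except KeyError as missing:
    print("Missing field:", missing)
```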

_Conclusion

Prompt engineering isn’t an art for the few – it’s a strategic skill anyone can learn with the right method. At Fyonda, we apply these principles daily: designing scalable prompts, testing them in real environments, and integrating them into no-code tools, APIs, and operational flows.

With the right approach, AI becomes your everyday ally – for writing, designing, analyzing, and optimizing your work.